Data Migration to the Cloud

A field-tested playbook for moving application data without breaking the business (or your sleep). No drama, just data.

Executive Summary

This white paper sets out a practical, governance-safe approach to migrating application data to the cloud. It focuses on outcomes over theatre: discover what exists, design before you build, rehearse until cutover is boring, and reconcile until the auditors are smiling. The tone is straight-talking with professional wryness—the kind that keeps meetings short and results long-lived.

- Goal: Put the right data in the right cloud in a way that is legal, auditable, and cost-aware.

- Method: Discover → Design → Prepare → Migrate → Cutover → Stabilise.

- Guardrails: Clear ownership rules (including time-sliced edge cases), privacy & security by default, automated quality controls, and rollback that actually rolls back.

Contents

- Purpose & Scope

- Approach Overview

- Governance & Guardrails

- Phased Delivery

- Data Disposition Catalogue

- Migration Patterns

- Case Example: The “Janey Job-Hopper” Problem

- Controls & Assurance

- Cutover Playbook

- Roles & Responsibilities

- Worked Mini-Examples

- Definition of Done

- Risks & Antidotes

- Reference Architecture (Mental Model)

- Appendix: Glossary

1) Purpose & Scope

Audience: CIOs, programme leads, data architects, platform engineers, and compliance teams who like a plan that survives contact with reality.

In scope: Application data migrations to public cloud platforms; patterns from “lift & shift” to refactor; selective and time-sliced extraction for divestments; assurance and handover.

Out of scope: Full application modernisation, non-data infra migration specifics, and anything that requires a séance with a vendor EULA.

2) Approach Overview

The delivery spine is simple: Discover → Design → Prepare → Migrate → Cutover → Stabilise. Measure twice, cut once, reconcile always.

Discover

Non-technical: Inventory apps and their data like a house move: what’s coming, what’s going to storage, what’s going to charity.

Technical: Catalogue domains, systems, tables, SLAs, RPO/RTO, PII/PHI, CRUD patterns, CDC, lineage hot spots.

Design

Non-technical: Decide what to lift, re-platform, refactor, split, or retire. Agree how to prove success.

Technical: Patterns; target stores; SCD; data contracts; keys; reconciliation; security by design.

Prepare

Non-technical: Fix the ugly stuff early so it doesn’t become “live” ugly.

Technical: Profiling, DQ fixes, reference alignment; build landing zones, IAM; pipelines & IaC.

Migrate

Non-technical: Rehearse until boring—then do it for real.

Technical: Dry/mock runs; CDC to shrink window; automated validation (counts, sums, checksums, KPIs).

Cutover

Non-technical: Calm hands, clear comms, cake afterwards.

Technical: Freezes; final delta; flip endpoints; cache warm; health checks; optional dual-run.

Stabilise

Non-technical: Prove it works; retire scaffolding; hand to BAU.

Technical: Post-cutover validation, tuning, budgets/alerts; decommission legacy; update lineage; BAU runbooks.

3) Governance & Guardrails

- Ownership rules: codified, time-sliced where needed (policy IDs versioned and auditable).

- Security & privacy: KMS/CMKs, encryption at rest/in transit, masking/tokenisation for lower environments, least-privilege IAM.

- Quality gates: Null %, referential integrity, ranges, regex masks—automated.

- Cost controls: Budgets, tagging, alerts, auto-suspend/scale; FinOps review baked into BAU.
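
The quality gates listed above can be automated with very little machinery. The following is a minimal sketch, assuming rows arrive as plain Python dictionaries; the helper names, the sample `orders`/`customers` data and the `ORD-0001` identifier format are all hypothetical and not taken from any particular tool.

```python
import re
from typing import Any

Row = dict[str, Any]

def null_pct(rows: list[Row], column: str) -> float:
    """Percentage of rows where `column` is missing or None."""
    if not rows:
        return 0.0
    nulls = sum(1 for r in rows if r.get(column) is None)
    return 100.0 * nulls / len(rows)

def out_of_range(rows: list[Row], column: str, lo: float, hi: float) -> list[Row]:
    """Rows whose non-null value falls outside the inclusive range [lo, hi]."""
    return [r for r in rows
            if r.get(column) is not None and not (lo <= r[column] <= hi)]

def regex_failures(rows: list[Row], column: str, pattern: str) -> list[Row]:
    """Rows whose non-null value does not fully match the regex mask."""
    rx = re.compile(pattern)
    return [r for r in rows
            if r.get(column) is not None and not rx.fullmatch(str(r[column]))]

def orphans(children: list[Row], fk: str, parents: list[Row], pk: str) -> list[Row]:
    """Child rows whose foreign key has no matching parent (referential integrity)."""
    parent_keys = {p[pk] for p in parents}
    return [c for c in children
            if c.get(fk) is not None and c[fk] not in parent_keys]

# Hypothetical sample data with one defect of each kind.
customers = [{"customer_id": 1}, {"customer_id": 2}]
orders = [
    {"order_id": "ORD-0001", "customer_id": 1, "amount": 120.0},
    {"order_id": "ORD-0002", "customer_id": 2, "amount": None},
    {"order_id": "bad-id", "customer_id": 9, "amount": -5.0},
    {"order_id": "ORD-0004", "customer_id": 1, "amount": 40.0},
]

report = {
    "amount_null_pct": null_pct(orders, "amount"),
    "amount_out_of_range": len(out_of_range(orders, "amount", 0, 1_000_000)),
    "order_id_bad_format": len(regex_failures(orders, "order_id", r"ORD-\d{4}")),
    "orphan_orders": len(orphans(orders, "customer_id", customers, "customer_id")),
}
print(report)
```

In practice each figure would be compared against a threshold agreed in the design phase, and a breach would block the load rather than just print.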

4) Phased Delivery (What We Actually Do)

Phase A — Discover

Non-technical: Inventory apps and data; decide what travels, what is stored, and what is retired.

Technical: Catalogue domains/systems/tables/volumes/SLAs; map CRUD/CDC/API limits; identify master vs transactional vs analytical; sketch lineage.

Outputs: Data inventory, sensitivity tags, migration candidates with effort/benefit.

Phase B — Design

Non-technical: Choose the fate of each dataset; agree the definition of done.

Technical: Select patterns (snapshot, CDC, API, files); define target storage, partitioning & retention; contracts & schemas; SCD, keys; reconciliation; security design.

Outputs: Migration design per app/dataset; acceptance criteria; runbooks; test & reconciliation packs.

Phase C — Prepare

Non-technical: Remove surprises; keep the good ones for birthdays.

Technical: Profiling & DQ fixes; reference alignment; dedupe; build landing zones, IAM, VNET/Private Link, secrets; pipelines & IaC.

Outputs: Cleaned data slices; ready pipelines; non-prod envs; rehearsal plan.

Phase D — Migrate

Non-technical: Rehearse, rehearse, rehearse. Then do it for real.

Technical: Dry runs → mock runs → dress rehearsal with cutover timings; incremental syncs (CDC); automated validation (counts, sums, distincts, checksums, KPIs).

Outputs: Signed rehearsal results; tuned timings; tested rollback.
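
As an illustration of the automated validation in this phase, here is a minimal sketch of a source-versus-target comparison covering counts, sums, distinct keys and a checksum. It assumes both sides can be read as lists of dictionaries with identical column names and value formatting; the function names and sample rows are hypothetical.

```python
import hashlib
from decimal import Decimal

def row_digest(row: dict) -> int:
    """Stable 64-bit digest of a row, independent of column order."""
    canonical = "|".join(f"{k}={row[k]}" for k in sorted(row))
    digest = hashlib.sha256(canonical.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")

def profile(rows: list[dict], key: str, amount: str) -> dict:
    """Summarise a dataset so two copies can be compared cheaply."""
    checksum = 0
    for r in rows:
        # Modular addition is order-independent and, unlike XOR,
        # does not cancel out when the same row is loaded twice.
        checksum = (checksum + row_digest(r)) % (1 << 64)
    return {
        "row_count": len(rows),
        "amount_sum": sum((Decimal(str(r[amount])) for r in rows), Decimal("0")),
        "distinct_keys": len({r[key] for r in rows}),
        "checksum": checksum,
    }

def reconcile(source: list[dict], target: list[dict], key: str, amount: str) -> dict:
    """Return only the measures that differ, as (source, target) pairs."""
    s, t = profile(source, key, amount), profile(target, key, amount)
    return {name: (s[name], t[name]) for name in s if s[name] != t[name]}

# Hypothetical sample data.
source = [
    {"order_id": 1, "amount": "10.50"},
    {"order_id": 2, "amount": "20.00"},
    {"order_id": 3, "amount": "5.25"},
]
target_ok = list(reversed(source))  # same rows, different load order
target_bad = source[:2]             # one row lost in flight

print(reconcile(source, target_ok, key="order_id", amount="amount"))
print(reconcile(source, target_bad, key="order_id", amount="amount"))
```

An empty result means the two copies agree on every measure. The checksum is only as good as the canonical formatting: if the target stores `10.5` where the source stores `10.50`, normalise before hashing.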

Phase E — Cutover

Non-technical: Communicate the window and contingency; nominate who brings cake.

Technical: Freeze where needed; final delta; flip endpoints; warm caches; verify health; optional dual-run.

Outputs: Go/No-Go record; cutover log; first-day checks; on-call rota.

Phase F — Stabilise

Non-technical: Prove it works, document it, hand to BAU.

Technical: Post-cutover validation; performance tuning; cost guardrails/alerts; retire legacy; update lineage & catalog; BAU handover.

Outputs: Signed acceptance; decommission plan complete; BAU ownership.

5) Data Disposition Catalogue

| Option | Non-technical description | Technical description | Example | Data pitfalls |
|---|---|---|---|---|
| Lift & Shift (Live) | Move it as-is, change the postcode. | Rehost DB to managed service; keep schema; minimal refactor. | VM-based app DB → managed Postgres. | Platform constraints; collation/timezone mismatches. |
| Re-platform | Same furniture, better house. | Self-managed DB → cloud-native equivalent. | On-prem Oracle → Oracle Autonomous Database, or Cloud SQL for PostgreSQL with an engine change. | Feature gaps; driver incompatibilities. |
| Refactor | Teach the data new tricks. | Break monolith into domain stores, events, lakehouse layers. | Orders → Bronze/Silver/Gold + CDC. | Key management & SCD complexity. |
| Selective Split | Only take what you’re entitled to. | Time-slice/entity-slice extraction with rules. | Divestment by company code + effective dates. | Ownership ambiguity; late-arriving facts. |
| Archive & Retire | Box it, label it, keep it legal. | WORM storage + index; searchable offline. | Closed app with 7-year retention. | Poor indexing → discovery pain. |
| Virtualise (Interim) | Read there, compute here. | External tables/views over remote storage. | Data sharing to analytics. | Latency & cost surprises. |
| Replace (Greenfield) | New system, migrate what matters. | Canonicalise → map → load into target SaaS. | Legacy CRM → Dynamics 365. | Loss of historic semantics. |

6) Migration Patterns

- Full Snapshot + Cutover — Small/medium data, low change rate. Simple, larger window.

- Snapshot + CDC Catch-up — High change rate, tight window. Practice-friendly, precise cutover.

- API-Led Trickle — SaaS with good APIs. Idempotent upserts; mind the throttling.

- File-Based Batch — Big, predictable nightly windows. Checksum everything.

- Event Rebuild — When you have a reliable event log. Elegant—if events actually exist.

- Dual-Run Bridge — Risky systems. Write to old and new; reconcile; then switch off old writes.
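
To make the Snapshot + CDC Catch-up pattern concrete, here is a minimal sketch of replaying change events on top of a loaded snapshot. It assumes each change carries a monotonically increasing log position (called `lsn` here) and a full row image; the `Change` class and field names are hypothetical rather than the event format of any specific CDC tool.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Change:
    lsn: int                    # log position; assumed strictly increasing at source
    op: str                     # "insert", "update" or "delete"
    key: int                    # primary key of the affected row
    row: Optional[dict] = None  # full row image; None for deletes

def apply_changes(target: dict[int, dict], changes: list[Change],
                  last_applied_lsn: int) -> int:
    """Apply changes newer than last_applied_lsn, in log order.

    Returns the new high-water mark. Replaying the same batch is harmless
    because anything at or below the mark is skipped.
    """
    for ch in sorted(changes, key=lambda c: c.lsn):
        if ch.lsn <= last_applied_lsn:
            continue  # already covered by the snapshot or an earlier batch
        if ch.op == "delete":
            target.pop(ch.key, None)
        elif ch.op in ("insert", "update"):
            if ch.row is None:
                raise ValueError(f"change {ch.lsn} has no row image")
            target[ch.key] = dict(ch.row)
        else:
            raise ValueError(f"unknown operation: {ch.op}")
        last_applied_lsn = ch.lsn
    return last_applied_lsn

# Hypothetical snapshot taken at log position 100.
target = {1: {"name": "Ann"}, 2: {"name": "Bob"}}
batch = [
    Change(99, "update", 1, {"name": "stale"}),  # older than the snapshot
    Change(101, "update", 2, {"name": "Bobby"}),
    Change(102, "insert", 3, {"name": "Cat"}),
    Change(103, "delete", 1),
]
mark = apply_changes(target, batch, last_applied_lsn=100)
print(mark, target)
```

Persisting the high-water mark alongside the target is what lets a rehearsal be restarted mid-run without double-applying anything.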

7) Case Example — The “Janey Job-Hopper” Problem

Scenario (plain English): Janey sells a blue widget on 2 March while employed by Company B; the customer pays on 30 March after Janey has transferred to Company C. In a divestment, Company B gets both the sale and the related payment because the sale was initiated during B-employment.

Implementation (technical):

- Model: Employment(effective_from, effective_to, company_code) and Transaction(initiated_ts, completed_ts, employee_id).

- Ownership rule: CASE WHEN initiated_ts BETWEEN emp.effective_from AND emp.effective_to THEN emp.company_code END

- Version the rule with a policy ID for audit; store provenance.

- Reconciliation: totals by company before/after split; variance ≤ agreed threshold.
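
The ownership rule above can be sketched outside the database as follows. Several things here are assumptions for illustration only: the year 2024, the transfer date of 15 March, the `POLICY_ID` value, an `employee_id` on the employment record to join on, and the choice of a half-open interval (start inclusive, end exclusive) so that a transaction on the transfer day matches exactly one employer. SQL's BETWEEN is inclusive at both ends, so adjust the comparison if your effective_to is an inclusive date.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional

POLICY_ID = "OWN-2024-001"  # hypothetical versioned policy identifier

@dataclass(frozen=True)
class Employment:
    employee_id: str
    company_code: str
    effective_from: datetime
    effective_to: Optional[datetime]  # None = open-ended; treated as exclusive bound

@dataclass(frozen=True)
class Transaction:
    txn_id: str
    employee_id: str
    initiated_ts: datetime
    completed_ts: datetime

def assign_owner(txn: Transaction, employments: list[Employment]) -> dict:
    """Assign a transaction to the company employing the seller at initiation."""
    matches = [
        e for e in employments
        if e.employee_id == txn.employee_id
        and e.effective_from <= txn.initiated_ts
        and (e.effective_to is None or txn.initiated_ts < e.effective_to)
    ]
    if len(matches) != 1:
        # Zero or overlapping periods: surface for review instead of guessing.
        return {"txn_id": txn.txn_id, "company_code": None,
                "policy_id": POLICY_ID,
                "reason": f"{len(matches)} matching employment periods"}
    return {"txn_id": txn.txn_id, "company_code": matches[0].company_code,
            "policy_id": POLICY_ID,
            "reason": "initiated_ts within employment period"}

# Janey's hypothetical history: Company B, then Company C from 15 March.
janey = [
    Employment("janey", "B", datetime(2023, 1, 1), datetime(2024, 3, 15)),
    Employment("janey", "C", datetime(2024, 3, 15), None),
]
sale = Transaction("T1", "janey", datetime(2024, 3, 2), datetime(2024, 3, 30))
owner = assign_owner(sale, janey)
print(owner)
```

The payment date (completed_ts) plays no part in the decision, which is the point of the rule: ownership follows initiation. The returned policy ID and reason are the provenance columns you would store next to each row.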

8) Controls & Assurance

| Control | What it proves | How |
|---|---|---|
| Reconciliation | No loss or duplication. | Row counts; sums; distinct business keys; UI parity sampling. |
| Lineage | Explainability. | Data catalogue; column-level lineage; commit hashes. |
| Quality gates | Fitness to load. | Null %, range, referential checks, regex masks. |
| Security | Privacy by design. | KMS/CMKs/HSMs; IAM least privilege; masked lower envs. |
| Cost guardrails | Spend under control. | Budgets; alerts; auto-suspend/scale; tagging; FinOps review. |
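
For the "masked lower envs" control, one common approach is deterministic tokenisation: the same input always yields the same token, so joins still work in test environments, but the original value cannot be read back. The sketch below uses a keyed HMAC from the Python standard library. The secret shown is a hypothetical placeholder; in a real setup it would be fetched from a KMS or secrets manager and never committed to code.

```python
import hashlib
import hmac

def tokenise(value: str, secret: bytes, length: int = 16) -> str:
    """Deterministic, keyed, one-way token for a sensitive value."""
    digest = hmac.new(secret, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:length]

def mask_email(email: str) -> str:
    """Keep the first character and the domain; hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"

# Hypothetical placeholder; load the real key from a KMS or secrets manager.
SECRET = b"replace-me-with-a-managed-key"

row = {"employee_id": "E1001", "email": "janey@example.com"}
masked = {
    "employee_id": tokenise(row["employee_id"], SECRET),
    "email": mask_email(row["email"]),
}
print(masked)
```

Truncating the digest keeps tokens readable but raises the collision risk on very large key sets, so size `length` to the cardinality of the column.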

9) Cutover Playbook (T-Timeline)

- T-14 days: Final mock. Sign timings, rollback, owners.

- T-3 days: Freeze catalogue changes; pin versions.

- T-1 day: CDC lag < agreed threshold; warm caches.

- T-0: Freeze writes (where needed); final delta; flip endpoints; smoke tests; publish “green board”.

- T+1–7: Dual-run (if used); daily reconciliations; switch off old writes; transition to BAU.
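
The Go/No-Go decision is easier to defend when the gates are evaluated mechanically and the result is logged. Here is a minimal sketch, assuming three gates drawn from the timeline above (CDC lag under threshold, rollback tested, reconciliation clean); the gate names, inputs and thresholds are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Gate:
    name: str
    passed: bool

def cutover_gates(cdc_lag_s: float, max_lag_s: float,
                  rollback_tested: bool, open_mismatches: int) -> list[Gate]:
    """Evaluate each pre-cutover condition independently."""
    return [
        Gate("cdc_lag_within_threshold", cdc_lag_s < max_lag_s),
        Gate("rollback_tested", rollback_tested),
        Gate("reconciliation_clean", open_mismatches == 0),
    ]

def decide(gates: list[Gate]) -> tuple[str, list[str]]:
    """GO only if every gate passed; otherwise list what failed."""
    failed = [g.name for g in gates if not g.passed]
    return ("GO" if not failed else "NO-GO", failed)

print(decide(cutover_gates(12, 60, True, 0)))
print(decide(cutover_gates(90, 60, True, 2)))
```

Returning the failed gate names, not just a verdict, gives the cutover log something useful to record.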

10) Roles & Responsibilities (Sane RACI)

- Data Lead (you): scope, standards, acceptance, arbitration.

- App Owner: source truth, test scenarios, sign-off.

- Security/Privacy: DPIA, keys, masking, access.

- Platform: accounts, networks, policies, observability.

- Engineers: pipelines, tests, IaC, runbooks.

- Ops/Support: steady-state, alerts, “who you gonna call”.

11) Worked Mini-Examples

1) Payroll to Cloud DW (Snapshot + CDC)

Non-technical: First copy everything, then keep it in sync until payday.

Technical: Full extract → staged parquet; Debezium/Kafka/CT-based CDC; SCD2 for employees; row-level security by org.

Checks: Employee counts per org; net pay totals; variance < £1 per 10k records.
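
The SCD2 handling mentioned for employees can be sketched like this: when tracked attributes change, close the current version and append a new one, so history is never overwritten. This is a minimal in-memory illustration; the column names (`valid_from`, `valid_to`, `attrs`) and the sample organisation values are hypothetical, and a warehouse would typically do the same with a MERGE statement.

```python
from datetime import date

def apply_scd2(history: list[dict], employee_id: str,
               new_attrs: dict, as_of: date) -> list[dict]:
    """Return history with a new version added if the attributes changed."""
    current = next(
        (r for r in history
         if r["employee_id"] == employee_id and r["valid_to"] is None),
        None,
    )
    if current is not None and current["attrs"] == new_attrs:
        return history  # nothing changed, so no new version
    result = []
    for r in history:
        if r is current:
            r = {**r, "valid_to": as_of}  # close the open version
        result.append(r)
    result.append({
        "employee_id": employee_id,
        "attrs": dict(new_attrs),
        "valid_from": as_of,
        "valid_to": None,  # None marks the current version
    })
    return result

# Hypothetical sequence: hire, unchanged re-delivery, then a transfer.
history: list[dict] = []
history = apply_scd2(history, "E1", {"org": "Sales"}, date(2024, 1, 1))
history = apply_scd2(history, "E1", {"org": "Sales"}, date(2024, 2, 1))
history = apply_scd2(history, "E1", {"org": "Finance"}, date(2024, 6, 1))
for version in history:
    print(version)
```

Skipping unchanged re-deliveries matters with CDC feeds, which often resend rows whose tracked columns have not moved.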

2) Legacy CRM → SaaS (API-Led Replace)

Non-technical: Move customers with their history; skip the 2008 zombie leads.

Technical: Canonical IDs; throttle API; idempotency keys for upserts; archive “zombies” to WORM with an index.

Checks: Customers by segment; opportunities by stage; random UI spot checks.
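
Throttling and idempotency tend to be the two places where API-led loads go wrong, so a sketch may help. The code below retries a throttled upsert with exponential back-off plus jitter and derives the idempotency key from the record itself, so a retry cannot create a duplicate. `FakeCrmApi` and `Throttled` are hypothetical in-memory stand-ins, not the client of any real SaaS product.

```python
import random
import time
import uuid

class Throttled(Exception):
    """Raised when the (hypothetical) API signals a rate limit."""

class FakeCrmApi:
    """In-memory stand-in for a SaaS API; throttles the first N calls."""
    def __init__(self, fail_first: int = 0):
        self.records: dict[str, dict] = {}
        self.seen_keys: set[str] = set()
        self.fail_first = fail_first
        self.calls = 0

    def upsert(self, canonical_id: str, payload: dict, idempotency_key: str) -> None:
        self.calls += 1
        if self.calls <= self.fail_first:
            raise Throttled()
        if idempotency_key in self.seen_keys:
            return  # duplicate delivery: acknowledge, change nothing
        self.seen_keys.add(idempotency_key)
        self.records[canonical_id] = dict(payload)

def idempotency_key(canonical_id: str, payload: dict) -> str:
    """Deterministic key: same record and content always give the same key."""
    return str(uuid.uuid5(uuid.NAMESPACE_URL,
                          f"{canonical_id}:{sorted(payload.items())}"))

def upsert_with_backoff(api, canonical_id: str, payload: dict,
                        max_attempts: int = 5, base_delay: float = 0.5,
                        sleep=time.sleep) -> int:
    """Upsert with exponential back-off and jitter; returns attempts used."""
    key = idempotency_key(canonical_id, payload)
    for attempt in range(max_attempts):
        try:
            api.upsert(canonical_id, payload, key)
            return attempt + 1
        except Throttled:
            if attempt == max_attempts - 1:
                raise  # out of attempts: let the caller decide
            delay = base_delay * (2 ** attempt)
            sleep(delay + random.uniform(0, delay))
    raise RuntimeError("max_attempts must be at least 1")

api = FakeCrmApi(fail_first=2)
no_wait = lambda _seconds: None  # skip real sleeping in this demo
attempts = upsert_with_backoff(api, "C-1", {"name": "Acme"}, sleep=no_wait)
print(attempts, api.records)
```

Passing `sleep` as a parameter keeps the retry logic testable without waiting for real time to pass.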

3) Manufacturing Orders to Lakehouse (Refactor)

Non-technical: Turn the shed of CSVs into a tidy pantry: raw shelf, cleaned shelf, serving shelf.

Technical: Bronze (raw) → Silver (validated) → Gold (facts/dims); SCD2 on product & plant; partition by order_date; Z-order on customer_id.

Checks: Order totals by month/plant; join completeness across dims.
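
Both checks for this example fit in a few lines. The sketch below totals orders by month and plant so Bronze and Gold can be compared, and measures join completeness as the share of fact rows whose dimension key resolves. It assumes ISO-formatted `order_date` strings and amounts held as decimal strings; the sample rows and plant codes are hypothetical.

```python
from collections import defaultdict
from decimal import Decimal

def totals_by_month_plant(orders: list[dict]) -> dict:
    """Sum order amounts per (YYYY-MM, plant)."""
    totals: dict = defaultdict(Decimal)  # Decimal() starts each bucket at 0
    for o in orders:
        month = o["order_date"][:7]  # "2024-03-05" -> "2024-03"
        totals[(month, o["plant"])] += Decimal(o["amount"])
    return dict(totals)

def join_completeness(facts: list[dict], dim_keys: set, fk: str) -> float:
    """Fraction of fact rows whose foreign key exists in the dimension."""
    if not facts:
        return 1.0
    matched = sum(1 for f in facts if f.get(fk) in dim_keys)
    return matched / len(facts)

# Hypothetical sample: Gold holds the same orders as Bronze, reordered.
bronze = [
    {"order_date": "2024-03-05", "plant": "P1", "amount": "100.00"},
    {"order_date": "2024-03-19", "plant": "P1", "amount": "50.50"},
    {"order_date": "2024-04-02", "plant": "P3", "amount": "10.00"},
]
gold = list(reversed(bronze))
plant_dim = {"P1", "P2"}  # P3 is missing from the dimension

print(totals_by_month_plant(bronze) == totals_by_month_plant(gold))
print(join_completeness(gold, plant_dim, "plant"))
```

A completeness figure below the agreed threshold usually points at a dimension loaded late or a key that was reformatted on the way through Silver.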

12) Definition of Done

- All acceptance tests pass (counts, sums, samples).

- Lineage & catalogue updated; DPIA complete; least-privilege access verified.

- Runbooks, alerts, SLOs in place; budget alarms set.

- Legacy feeds turned off; archive searchable; owners named.

13) Risks & Antidotes

| Risk | Why it bites | Antidote |
|---|---|---|
| Ownership ambiguity | Divestments, transfers, late payments. | Time-sliced rules + policy IDs; legal sign-off; audit columns. |
| API throttling | SaaS protects itself. | Back-off, chunking, idempotency. |
| Schema drift | Source keeps changing. | Contract tests; schema registry; versioned pipelines. |
| Privacy leakage | Lower envs get real data. | Masking/tokenisation; synthetic datasets. |
| Cost sprawl | “Just one more cluster”. | Budgets; auto-suspend; tagging; FinOps review. |

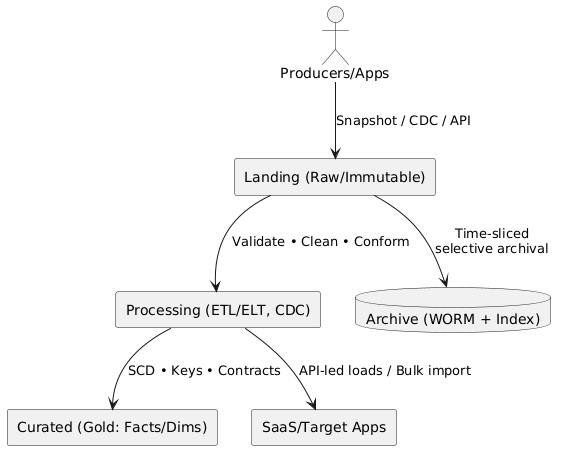

14) Reference Architecture (Mental Model)

    @startuml
    skinparam componentStyle rectangle
    actor "Producers/Apps" as Src
    rectangle "Landing (Raw/Immutable)" as L
    rectangle "Processing (ETL/ELT, CDC)" as P
    rectangle "Curated (Gold: Facts/Dims)" as C
    rectangle "SaaS/Target Apps" as T
    database "Archive (WORM + Index)" as A
    Src --> L : Snapshot / CDC / API
    L --> P : Validate • Clean • Conform
    P --> C : SCD • Keys • Contracts
    P --> T : API-led loads / Bulk import
    L --> A : Time-sliced\nselective archival
    @enduml

Note: Include as PlantUML in engineering docs; compile to an image for presentations.

15) Appendix — Glossary

- BAU: Business-as-usual (steady-state operations).

- CDC: Change Data Capture — streaming or log-based deltas since last snapshot.

- DPIA: Data Protection Impact Assessment.

- Lakehouse: Data lake + warehouse traits (Bronze/Silver/Gold).

- RACI: Responsible, Accountable, Consulted, Informed.

- SCD: Slowly Changing Dimension (Type 2 tracks history).

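- SLA/RPO/RTO: Service Level Agreement (the agreed availability and performance commitment); Recovery Point Objective (the maximum tolerable data loss, measured as time since the last recoverable state); Recovery Time Objective (the maximum tolerable time to restore service).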

- WORM: Write Once, Read Many (immutable storage).

Reference Architecture from section 14 (PlantUML)